Elon Musk pushed to use Tesla’s internal driver monitoring camera to record video of drivers’ behavior, primarily for Tesla to use this video as evidence to defend itself from investigations in the event of a crash, according to Walter Isaacson’s new biography of the Tesla CEO.

Walter Isaacson’s biography of Elon Musk is out, resulting in several revelations about Tesla’s past, present, and future. One of these revelations is a potential use for the Tesla internal driver monitoring camera that is included on current Teslas.

Many cars have a camera like this to monitor driver attentiveness and warn a driver if they seem to be paying too little attention to the road, though other automakers typically use infrared cameras and the data never leaves the car.

Teslas have had these cameras for many years, first showing up on the Model 3 in 2017 and later on the S/X, but they weren’t activated until 2021. Before that, Tesla determined attention by detecting steering wheel torque (a safeguard that was pretty easy to defeat).

Nowadays, the camera is used to ensure that drivers are still watching the road while Autopilot or FSD is activated, as both systems are “Level 2” self-driving systems and thus require driver attention. The hope, though, was to potentially use the camera for cabin monitoring if Tesla’s robotaxi dream is ever realized.

But that wasn’t the only thing Tesla wanted to use the cameras for. According to the biography, Musk pushed internally to use the camera to record clips of Tesla drivers, initially without their knowledge, with the goal of using this footage to defend the company in the event of investigations into the behavior of its Autopilot system.

Musk was convinced that bad drivers rather than bad software were the main reason for most of the accidents. At one meeting, he suggested using data collected from the car’s cameras—one of which is inside the car and focused on the driver—to prove when there was driver error. One of the women at the table pushed back. “We went back and forth with the privacy team about that,” she said. “We cannot associate the selfie streams to a specific vehicle, even when there’s a crash, or at least that’s the guidance from our lawyers.”

– Walter Isaacson, Elon Musk

The first point here is interesting because there are indeed a lot of bad drivers who misuse Autopilot and are certainly to blame for what happens while it’s activated.

As mentioned above, Autopilot and FSD are “Level 2” systems. There are six levels of self-driving – 0 through 5 – and levels 0-2 require active driving at all times, whereas with levels 3+, the driver can turn their attention away from the road in certain circumstances. But despite Tesla’s insistence that drivers still pay attention, a study has shown that driver attention does decrease with the system activated.

We have seen many examples of Tesla drivers behaving badly with Autopilot activated, though those egregious examples aren’t entirely the issue here. There have been many well-publicized Tesla crashes, and in the immediate aftermath of an incident, rumors often swirl about whether Autopilot was activated. Regardless of whether there is any reason to believe that it was activated, media reports or social media will often focus on Autopilot, leading to an often unfair public perception that there is a connection between Autopilot and crashing.

But in many of these cases, Autopilot eventually gets exonerated when the incident is investigated by authorities. Oftentimes, it’s a simple matter of the driver not using the system properly or relying on it where they should not. These exonerations often include investigations where vehicle logs are pulled to show whether Autopilot was activated, how often it had to remind the driver to pay attention, what speed the car was driving, and so on. Cameras could add another data point to those investigations.

Even if crashes happen due to human error, this could still be an issue for Tesla because human error is often a design issue. The system could be designed or marketed to better remind drivers of their responsibility (in particular, don’t call it “full self-driving” if it doesn’t drive itself, perhaps?), or more safeguards could be added to ensure driver attention.

The NHTSA is currently probing Tesla’s Autopilot system, and it looks like safeguards are what they’ll focus on – they’ll likely force changes to the way Tesla monitors drivers for safety purposes.

But then Musk goes on to suggest that not only are these accidents generally the fault of the drivers, but that he wants cabin cameras to be used to spy on drivers, with the specific purpose of winning lawsuits or investigations brought against Tesla (such as the NHTSA probe). Not to enhance safety, not to collect data to improve the system, but to protect Tesla and his ego – to win.

In addition to this adversarial stance against his customers, the passage suggests that his initial idea was to collect this info without informing the driver, with the data privacy pop-up only being proposed later in the discussion.

Musk was not happy. The concept of “privacy teams” did not warm his heart. “I am the decision-maker at this company, not the privacy team,” he said. “I don’t even know who they are. They are so private you never know who they are.” There were some nervous laughs. “Perhaps we can have a pop-up where we tell people that if they use FSD [Full Self-Driving], we will collect data in the event of a crash,” he suggested. “Would that be okay?”

The woman thought about it for a moment, then nodded. “As long as we are communicating it to customers, I think we’re okay with that.”

– Walter Isaacson, Elon Musk

Here, it’s notable that Musk says he is the decision-maker and that he doesn’t even know who the privacy team is.

Over the past few years, Musk has seemed increasingly distracted in his management of Tesla, lately focusing much more on Twitter than on the company that has catapulted him to the top of the list of the world’s richest people.

It might be good for him to have some idea of who the people working under him are, especially the privacy team, for a company that has active cameras running on the road, and in people’s cars and garages, all around the world, all the time – particularly when Tesla is currently facing a class action lawsuit over video privacy.

In April, it was revealed that Tesla employees shared videos recorded inside owners’ garages, including videos of people who were unclothed and ones where some personally identifiable information was attached. And in Illinois, a separate class action lawsuit focuses on the cabin camera specifically.

While Tesla does have a dedicated page describing its data privacy approach, a new independent analysis released last week by the Mozilla Foundation ranked Tesla in last place among car brands – and ranked cars as the worst product category Mozilla has ever seen in terms of privacy.

So, this blithe dismissal of the privacy team’s concerns does not seem productive and does seem to have had the expected result in terms of Tesla’s privacy performance.

Musk is known for making sudden pronouncements, demanding that a particular feature be added or subtracted, and going against the advice of engineers to be the “decision-maker” – regardless of whether the decision is the right one. Similar behavior has been seen in his leadership of Twitter, where he has dismantled trust & safety teams and, in the chaos of the takeover, “may have jeopardized data privacy and security,” according to the DOJ.

While we don’t have a date for this particular discussion, it does seem to have happened at least post-2021, after the sudden deletion of radar from Tesla vehicles. The deletion of radar itself is an example of one of these sudden demands by Musk, which Tesla is now having to walk back.

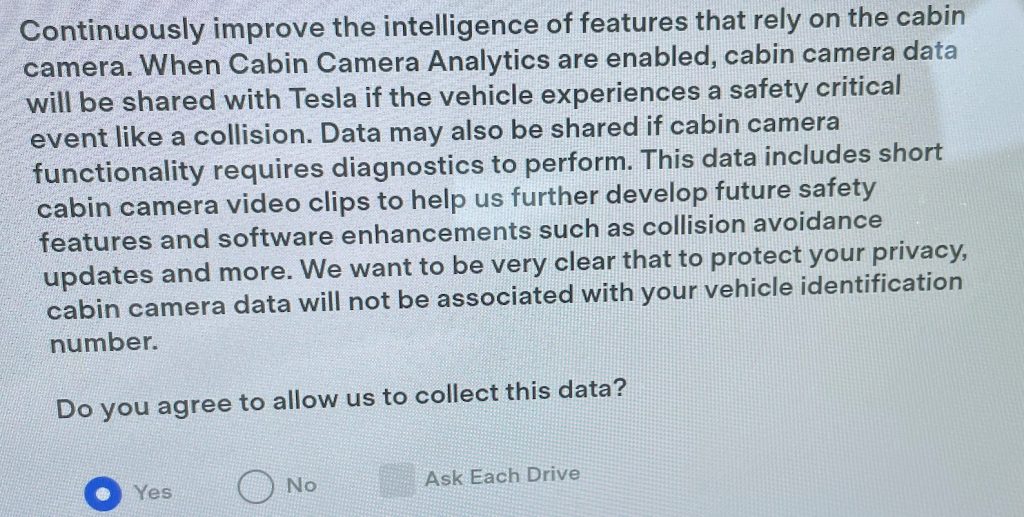

For its part, Tesla does currently have a warning in the car that describes what the company will do with the data from your internal camera. This is what it looks like currently in a Model 3:

Tesla’s online Model 3 owner’s manual contains similar language describing the use of the cabin camera.

Notably, this language focuses on safety rather than driver monitoring. Tesla explicitly says that the camera data doesn’t leave the vehicle unless the owner opts in and that the data will help with future safety and functionality improvements. It also says that the data is not attached to a VIN, nor is it used for identity verification.

Beyond that, we also have not seen Tesla defend itself in any Autopilot lawsuits or investigations by using the cabin camera explicitly – at least not yet. With driver monitoring in focus in the current NHTSA investigation, it’s entirely possible that we might see more usage of this camera in the future or that camera clips are being used as part of the investigation.

But at the very least, this language in current Teslas does suggest that Musk did not get his wish – perhaps to the relief of some of the more privacy-interested Tesla drivers.